AI Compliance in Pharma: EU, US & UK Legislative Insights

30 Jul, 2025

The future of pharmaceuticals is inextricably linked with technology, and increasingly with artificial intelligence and its tools, platforms, products, and services. For pharmaceutical companies to thrive in this new era, a proactive and strategic approach is essential: one that keeps pace with legislation, which brings challenges as well as opportunities, definitions, and frameworks.

Implementing AI comes with challenges, so it is important to understand the quickly evolving legislative landscape. The following steps can help you prepare:

- Assess Your AI Readiness: Evaluate your internal capabilities, data infrastructure, and existing processes to identify areas where AI can create the most value and where challenges might arise.

- Understand the Regulatory Landscape: Develop a deep understanding of AI regulations in all markets where you operate or plan to operate. This includes not just general AI laws but also specific guidance for AI in medical products.

- Prioritize Ethical AI Development: Implement robust ethical guidelines and bias mitigation strategies from the outset. Human oversight, transparency, and data privacy should be core tenets of your AI development.

- Embrace Collaboration: Engage with regulatory bodies, industry consortia, and technology partners to stay ahead of the curve and contribute to the evolution of best practices.

- Invest in Talent & Training: Equip your workforce with the skills needed to develop, implement, and manage AI technologies responsibly and effectively.

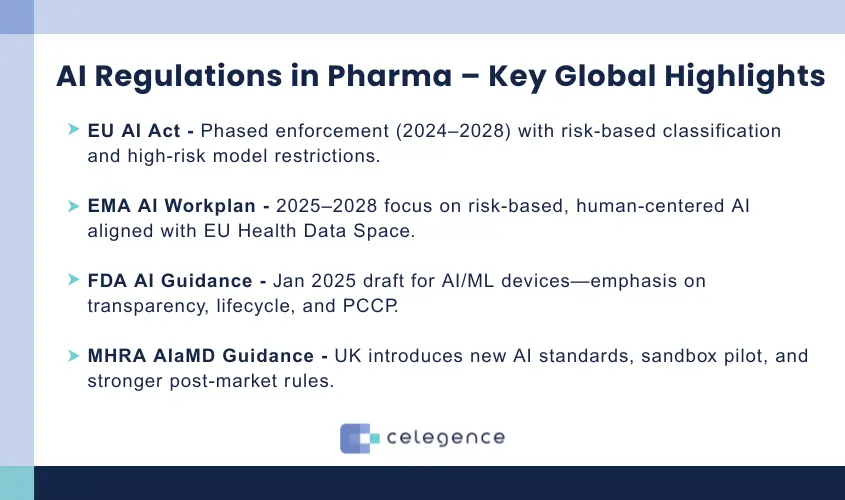

AI Legislation and Regulatory Updates in the European Union:

The EU AI Act:

The European Union AI Act is one of the first comprehensive pieces of legislation in the world aimed at regulating the use of AI. The Act defines how AI is to be governed, implemented, and monitored across EU member states. It establishes a framework for the operation and deployment of AI, as well as a standard process for single-purpose AI (SPAI) systems covering market entry and operational activity, with the overall aim of ensuring a comprehensive understanding of and approach to AI in the EU.

The Act applies to AI providers, defined as those who develop AI systems, tools, and models; importers and distributors of AI into the EU; entities that use high-risk AI systems to conduct their business; and manufacturers that place AI products on the EU market.

- Entered into force on August 1, 2024. To be fully enforced in August 2026.

- The prohibition on AI systems deemed to pose unacceptable risk has applied since February 2025.

- Governance rules for general-purpose AI models apply from August 2025.

- Rules for AI systems embedded in regulated products apply from August 2027.

- There are ongoing proposals and discussions about pausing implementation of the EU AI Act to give industry time to become compliance-ready and to assess its use of AI, especially where high-risk AI models are concerned.

- The EU AI Act classifies AI systems into four risk-level categories:

  - Minimal

  - Limited

  - High

  - Unacceptable

EMA AI Workplan:

- The EMA and the Heads of Medicines Agencies (HMA) published a joint 2025-2028 workplan in May 2025. The workplan aims to improve data access, sharing, and analysis to facilitate decision-making.

- The workplan aligns with the European Health Data Space and the EU AI Act.

- The EMA issued a final reflection paper on the use of AI in the medicinal product lifecycle in September 2024. The paper focuses on a risk-based, human-centered approach to the use of AI in medicines and clarifies the responsibilities of Marketing Authorization Holders (MAHs), applicants, clinical trial sponsors, and manufacturers.

AI Legislation and Regulatory Updates in the United States:

FDA AI/Machine-Learning Devices Guidance

The US FDA issued draft guidance on January 6, 2025, for developers of AI-enabled medical devices. The guidance provides recommendations for the design, development, and documentation of AI-enabled devices throughout their lifecycle. Transparency, model bias, and post-market performance are among the aspects it covers in detail.

Overall, the FDA defines and emphasizes a predetermined change control plan (PCCP) for all AI-enabled devices. PCCPs aim to manage iterative improvements and changes to algorithms after submission and approval (i.e., variation eCTD submissions).

National and State Level Legislation

US Executive Order 14179, “Removing Barriers to American Leadership in Artificial Intelligence,” and the Executive Order “Advancing United States Leadership in Artificial Intelligence Infrastructure” were both signed in January 2025. These orders focus on establishing AI infrastructure in the US and promoting economic and human flourishing while reducing risks to national security.

In 2025, all 50 US states introduced AI legislation, covering aspects such as requirements for critical infrastructure controlled by AI, transparency regarding automated decisions made by AI tools, and worker protection in light of AI developments.

AI Legislation and Regulatory Updates for the Health Sector in the UK (MHRA):

- AI as a medical device (AIaMD) MHRA guidance: The MHRA has accepted all 15 recommendations in the Regulatory Horizons Council (RHC) report on the regulation of AI as a medical device (AIaMD). The MHRA is working to develop regulations that address these recommendations, focusing on standards and guidance while building on existing legislation.

- Regulatory Sandbox (AI Airlock): A sandbox for piloting regulatory challenges and solutions for AI models and software as a medical device. The sandbox was created in collaboration with the NHS. The pilot was expected to be completed by spring 2025.

- Post-Market Surveillance: The UK is strengthening post-market surveillance aspects of medical device regulations, with new legislation taking effect in June 2025, increasing obligations and clarity on data gathering post-market, which could have an impact on AIaMD.

Global Regulatory Outlook

Artificial intelligence (AI) is rapidly transforming the pharmaceutical industry, requiring organizations to adopt strategic, future-ready approaches that align with evolving global regulations. Key priorities include assessing AI readiness, understanding the legal landscape, ensuring ethical development, fostering collaboration, and building internal AI capabilities.

- European Union

  - EU AI Act: A comprehensive legislative framework categorizing AI systems by risk (minimal to unacceptable). It affects developers, importers, distributors, and users of high-risk AI and applies in stages from 2025 onward.

  - EMA AI Workplan (2025–2028): Aligns with the EU AI Act and European Health Data Space, promoting better data access and a risk-based, human-centered AI approach throughout the medicinal product lifecycle.

- United States

  - FDA Guidance (Jan 2025): Draft guidance for AI-enabled medical devices covering development, transparency, bias mitigation, and lifecycle management through a predetermined change control plan (PCCP).

  - Federal & State Legislation: Executive orders and state-level legislative activity across all 50 states emphasize AI infrastructure, security, transparency, and workforce protection.

- United Kingdom

  - MHRA AIaMD Regulation: Incorporates recommendations to regulate AI as a medical device, with new standards under development.

  - Regulatory Sandbox: A pilot initiative with the NHS to test AI models and address regulatory challenges.

  - Post-Market Surveillance: New rules effective June 2025 will enhance oversight and data collection for AI-integrated medical products.

Proactive alignment with these frameworks, along with a focus on ethics and cross-sector engagement, is essential to leveraging AI effectively and responsibly in the pharmaceutical sector.

How Celegence Can Support

Celegence offers regulatory expertise and AI-enabled solutions to help pharmaceutical and medical device companies stay compliant with evolving AI legislation. Our team supports clients with strategy development, documentation, risk assessments, and implementation of AI in regulated environments. With deep knowledge of the EU AI Act, FDA guidance, and MHRA expectations, we help you align your AI systems with global regulatory frameworks—efficiently and responsibly.

Explore Celegence’s AI-driven solutions today. Reach out to us or email info@celegence.com to get started.
